In the evolving landscape of artificial intelligence (AI), code generation models are transforming how software is produced, automating complex coding tasks and accelerating productivity. However, this progress is not without risks. Zero-day vulnerabilities in AI code generation systems represent a significant security threat, giving hackers a unique opportunity to exploit unpatched flaws before they are identified and addressed. This article examines the methods and techniques hackers use to exploit these vulnerabilities, shedding light on their potential impact and on mitigation strategies.

Understanding Zero-Day Vulnerabilities

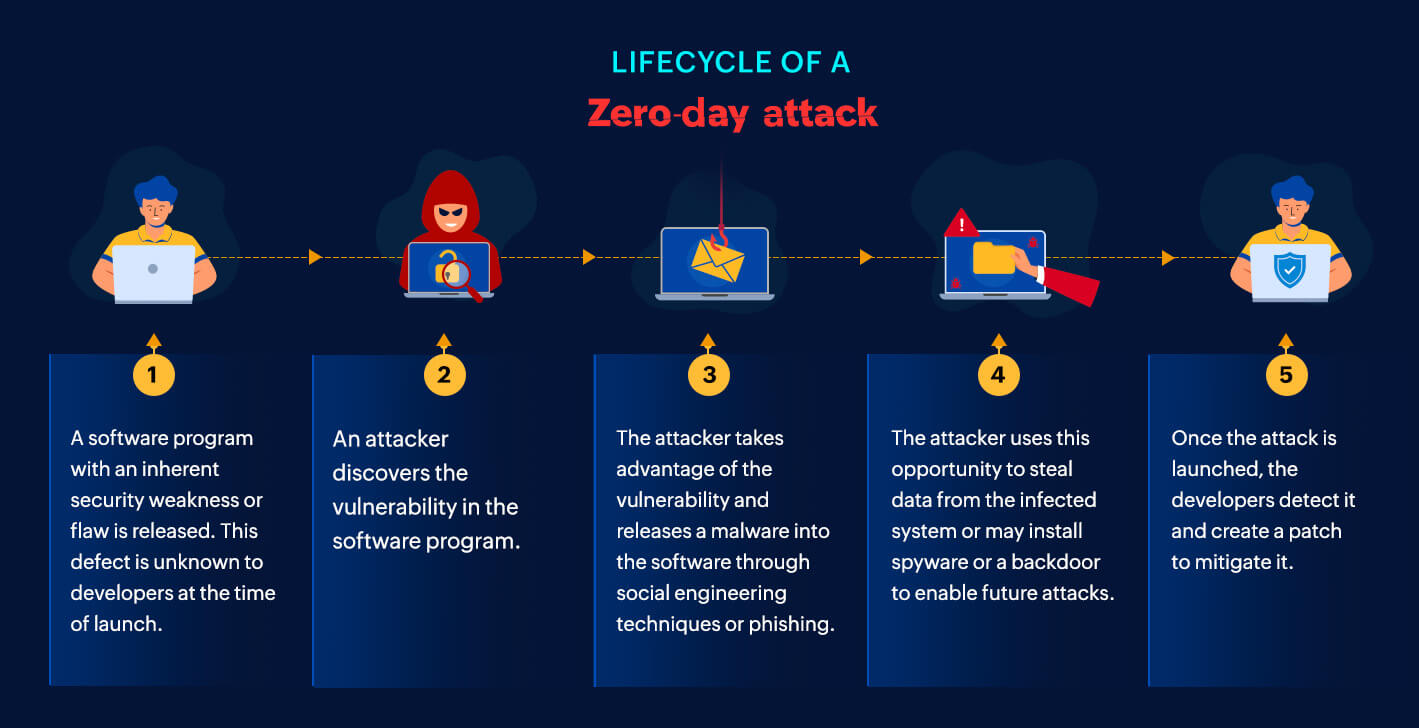

A zero-day vulnerability is a software flaw that is unknown to the software vendor or the security community. It is called "zero-day" because developers have had zero days to fix the issue before attackers exploit it. In the context of AI code generation, a zero-day vulnerability could be a flaw in the algorithms, model architecture, or implementation of the AI system that can be exploited to compromise the integrity, confidentiality, or availability of the generated code or of the system itself.

How Hackers Exploit Zero-Day Vulnerabilities

Reverse Engineering AI Models

Hackers often begin by reverse engineering AI models to identify potential weaknesses. This process involves examining the model's behavior and architecture to understand how it generates code. By studying the model's responses to a wide range of inputs, attackers can discover patterns or anomalies that reveal underlying vulnerabilities. For instance, if a model was trained on code containing certain types of errors or insecure coding practices, attackers can exploit this to make it produce flawed or harmful code.

Input Manipulation

One common technique is manipulating the input data provided to the AI code generation model. By crafting specific inputs that exploit known weaknesses in the model, attackers can induce the AI to generate code that contains vulnerabilities or even malicious payloads. For example, feeding the model malformed or specially crafted inputs can trick it into producing code with security flaws or unintended functionality, which can then be exploited in a real-world application.

Model Poisoning

Model poisoning involves injecting malicious data into the training dataset used to develop the AI model. By contaminating the training data with examples that contain hidden vulnerabilities or malicious code, attackers can influence the model to generate flawed or compromised code. This type of attack can be particularly difficult to detect, because the harmful patterns may only manifest under specific conditions or inputs.
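As one illustration of defending against poisoning, a training pipeline can screen candidate samples before they enter the dataset. The sketch below is a minimal, assumed example: the `Sample` type, the `INSECURE_PATTERNS` table, and the quarantine behavior are hypothetical, and regular expressions only catch crude, obvious patterns, so a production pipeline would pair this with real static analysis and provenance checks.

```python
import re
from dataclasses import dataclass

# Hypothetical deny-list of crude insecure patterns (illustrative, not exhaustive).
INSECURE_PATTERNS = {
    "eval call": re.compile(r"\beval\s*\("),
    "exec call": re.compile(r"\bexec\s*\("),
    "shell=True": re.compile(r"shell\s*=\s*True"),
    "pickle.loads": re.compile(r"\bpickle\.loads\s*\("),
    "hard-coded secret": re.compile(
        r"(?i)(api_key|password|secret)\s*=\s*['\"][^'\"]+['\"]"
    ),
}


@dataclass
class Sample:
    source: str  # where the sample came from (assumed metadata)
    code: str    # the code snippet proposed for training


def findings(code: str) -> list[str]:
    """Return the names of all patterns that match the snippet."""
    return [name for name, pattern in INSECURE_PATTERNS.items() if pattern.search(code)]


def filter_samples(samples: list[Sample]) -> tuple[list[Sample], list[tuple[Sample, list[str]]]]:
    """Split samples into those kept for training and those quarantined for review."""
    kept, quarantined = [], []
    for sample in samples:
        hits = findings(sample.code)
        if hits:
            quarantined.append((sample, hits))
        else:
            kept.append(sample)
    return kept, quarantined
```

Quarantining rather than silently dropping samples keeps a record that reviewers can use to trace where suspicious data came from.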

Adversarial Attacks

Adversarial attacks target the AI model's decision-making process by introducing subtle perturbations into the input data that cause the model to make incorrect or insecure choices. In the context of code generation, adversarial attacks can manipulate the AI's output so that it produces code that behaves unexpectedly or contains vulnerabilities. These attacks exploit the model's sensitivity to minor variations in its input, opening the door to security breaches.
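A defender can look for this kind of brittleness with a differential probe: send the model several semantically equivalent variants of the same prompt and flag any variant whose output differs in security-relevant ways. In this minimal sketch, `generate` is an assumed callable wrapping whatever model is under test, the variants and the `unsafe_markers` substring checks are illustrative only, and `toy_generate` is a deliberately brittle stand-in rather than a real model.

```python
from typing import Callable


def neutral_variants(prompt: str) -> list[str]:
    """Semantically equivalent rewrites of a prompt (illustrative, not exhaustive)."""
    return [
        prompt,
        prompt + " ",               # trailing whitespace
        "  " + prompt,              # leading whitespace
        prompt.rstrip(".") + ".",   # normalized final period
        " ".join(prompt.split()),   # collapsed internal whitespace
    ]


def unsafe_markers(code: str) -> frozenset[str]:
    """Crude substring checks for risky constructs in generated code."""
    markers = ("eval(", "exec(", "os.system(", "shell=True")
    return frozenset(m for m in markers if m in code)


def robustness_report(generate: Callable[[str], str], prompt: str) -> dict[str, frozenset[str]]:
    """Map each variant whose unsafe markers differ from the baseline to the markers found."""
    baseline = unsafe_markers(generate(prompt))
    report = {}
    for variant in neutral_variants(prompt):
        found = unsafe_markers(generate(variant))
        if found != baseline:
            report[variant] = found
    return report


def toy_generate(prompt: str) -> str:
    """Hypothetical stand-in model: a trailing space flips it to insecure output."""
    if prompt.endswith(" "):
        return "result = eval(user_input)"
    return "result = int(user_input)"


if __name__ == "__main__":
    print(robustness_report(toy_generate, "Parse the user's number."))
```

With the stand-in generator, only the variant with a trailing space is reported, because that is the one perturbation that flips its output to an `eval` call.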

Exploiting Model Bugs

Like any software, AI models can contain bugs or implementation flaws that are not immediately obvious. Hackers can exploit these bugs to bypass security measures, access sensitive data, or induce the model to produce unintended outputs. For example, if an AI model fails to properly validate its inputs or outputs, attackers can leverage these shortcomings to compromise the system's security.
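Output validation is one of the simplest guards against this class of bug. The sketch below assumes the model emits Python and rejects output that is oversized, contains NUL bytes, or does not parse; the `MAX_OUTPUT_CHARS` limit and the `validate_generated_python` name are hypothetical. Parsing with `ast.parse` only builds a syntax tree and never executes the code, so it is safe to run on untrusted output.

```python
import ast

MAX_OUTPUT_CHARS = 20_000  # assumed limit; tune per deployment


def validate_generated_python(code: str) -> tuple[bool, str]:
    """Return (ok, reason) for a piece of model-generated Python source."""
    if len(code) > MAX_OUTPUT_CHARS:
        return False, "output too large"
    # Checked before parsing: older Python versions raise ValueError, not SyntaxError, on NUL bytes.
    if "\x00" in code:
        return False, "NUL byte in output"
    try:
        ast.parse(code)  # parses only; nothing is executed
    except SyntaxError as exc:
        return False, f"syntax error at line {exc.lineno}"
    return True, "ok"
```

A syntactically valid result is not necessarily safe; this check is a first gate before deeper analysis such as a security audit.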

Effects of Exploiting Zero-Day Vulnerabilities

The exploitation of zero-day vulnerabilities in AI code generation can have far-reaching consequences:

Compromised Software Security

Flawed or malicious code generated by AI models can introduce vulnerabilities into software applications, leaving them open to further attacks. This can compromise the security and integrity of the software, leading to data breaches, system failures, or unauthorized access.

Loss of Trust

Exploited vulnerabilities can erode trust in AI-driven software development tools and systems. If developers and organizations cannot rely on AI code generation models to produce secure and reliable code, adoption of these technologies may slow, limiting their overall effectiveness and usefulness.

Reputational Damage

Organizations that suffer security breaches or vulnerabilities caused by AI-generated code may face reputational damage and a loss of customer confidence. Dealing with the fallout from such incidents can be costly and time-consuming, affecting the organization's bottom line and market position.

Mitigation Techniques

Regular Security Audits

Conducting regular security audits of AI models and code generation systems can help identify and address potential vulnerabilities before they are exploited. These audits should include both static and dynamic analysis of the model's behavior and of the generated code to uncover any weaknesses.
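For the static half of such an audit, Python's standard `ast` module can walk generated code without executing it. The sketch below flags a small, assumed deny-list of calls and any call that passes `shell=True` as a literal; the `DANGEROUS_CALLS` set, the `Finding` type, and the `audit` function are illustrative, and a real audit would rely on a maintained analyzer covering far more patterns.

```python
import ast
from dataclasses import dataclass

# Hypothetical deny-list, matched against dotted call names.
DANGEROUS_CALLS = {"eval", "exec", "compile", "os.system", "pickle.loads", "marshal.loads"}


@dataclass(frozen=True)
class Finding:
    line: int
    call: str
    detail: str


def _call_name(func: ast.expr) -> str:
    """Render a call target such as `os.system` or `eval` as a dotted string."""
    parts = []
    while isinstance(func, ast.Attribute):
        parts.append(func.attr)
        func = func.value
    if isinstance(func, ast.Name):
        parts.append(func.id)
        return ".".join(reversed(parts))
    return ""  # e.g. calls on call results, subscripts, lambdas


def audit(code: str) -> list[Finding]:
    """Statically scan Python source and report risky calls, sorted by line."""
    results = []
    for node in ast.walk(ast.parse(code)):
        if not isinstance(node, ast.Call):
            continue
        name = _call_name(node.func)
        if name in DANGEROUS_CALLS:
            results.append(Finding(node.lineno, name, "dangerous call"))
        for kw in node.keywords:
            if kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True:
                results.append(Finding(node.lineno, name, "shell=True"))
    return sorted(results, key=lambda f: f.line)
```

Because it only matches names syntactically, this check misses aliased imports (for example `from os import system as s`), which is one reason dedicated tools are preferable in practice.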

Robust Input Validation

Implementing rigorous input validation can help prevent input manipulation attacks. By validating and sanitizing all inputs to the AI model, organizations can reduce the risk of weaknesses being exploited through malicious or malformed data.
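A minimal sketch of prompt sanitization, assuming prompts arrive as plain text: it applies Unicode NFKC normalization, strips control and invisible formatting characters (such as bidirectional overrides) that can be used to hide instructions, and enforces a length limit. The `sanitize_prompt` function, the `PromptRejected` exception, and the 4,000-character limit are hypothetical choices, and this step alone does not stop prompts that are malicious in plain language.

```python
import unicodedata

MAX_PROMPT_CHARS = 4_000  # assumed limit


class PromptRejected(ValueError):
    """Raised when a prompt fails validation."""


def sanitize_prompt(raw: str) -> str:
    """Normalize a prompt, remove hidden characters, and enforce basic limits."""
    if not isinstance(raw, str):
        raise PromptRejected("prompt must be a string")
    # NFKC folds compatibility characters (e.g. ligatures, full-width letters) into plain forms.
    text = unicodedata.normalize("NFKC", raw)
    # Drop control (Cc) and format (Cf) characters, keeping newlines and tabs.
    text = "".join(
        ch for ch in text
        if ch in "\n\t" or unicodedata.category(ch) not in ("Cc", "Cf")
    )
    text = text.strip()
    if not text:
        raise PromptRejected("empty prompt")
    if len(text) > MAX_PROMPT_CHARS:
        raise PromptRejected("prompt too long")
    return text
```

Rejecting with an explicit exception, rather than silently truncating, makes failed validations visible to logging and monitoring.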

Model Hardening

Hardening AI models against known and potential vulnerabilities involves applying security best practices during model development and deployment. This includes securing training datasets, following secure coding practices, and using techniques to detect and mitigate adversarial attacks.
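One concrete piece of securing training datasets is detecting tampering after a dataset has been reviewed. The sketch below, using hypothetical function names, records a SHA-256 digest for every file in a dataset directory and later reports missing, unexpected, or modified files; it assumes the manifest itself is stored somewhere attackers cannot write to.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large dataset files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {
        str(p.relative_to(root)): sha256_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_manifest(root: Path, manifest: dict[str, str]) -> list[str]:
    """Return human-readable problems; an empty list means the dataset matches."""
    current = build_manifest(root)
    problems = [f"missing: {name}" for name in manifest if name not in current]
    problems += [f"unexpected: {name}" for name in current if name not in manifest]
    problems += [
        f"modified: {name}"
        for name, digest in manifest.items()
        if name in current and current[name] != digest
    ]
    return problems
```

Running the verification immediately before each training job narrows the window in which poisoned files could slip in unnoticed.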

Monitoring and Incident Response

Continuous monitoring of AI code generation systems and an established incident response plan are crucial for detecting and addressing security threats. Monitoring tools can help detect suspicious activity or anomalies in real time, enabling swift action to mitigate potential damage.
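As a small example of real-time anomaly detection, the monitor below counts how many flagged generations (for instance, outputs that failed an audit) each client produces within a sliding time window and signals escalation once a threshold is reached. The `FlagRateMonitor` class, its default 300-second window, and its threshold of 5 are assumptions for illustration, not recommended values.

```python
from collections import defaultdict, deque


class FlagRateMonitor:
    """Escalate when one client triggers too many flagged generations within a time window."""

    def __init__(self, window_seconds: float = 300.0, threshold: int = 5):
        self.window = window_seconds
        self.threshold = threshold
        # Per-client timestamps of flagged generations, oldest first.
        self._events: dict[str, deque] = defaultdict(deque)

    def record(self, client_id: str, timestamp: float, flagged: bool) -> bool:
        """Record one generation; return True if the client should be escalated."""
        events = self._events[client_id]
        # Evict flagged events that have fallen out of the window.
        while events and timestamp - events[0] > self.window:
            events.popleft()
        if flagged:
            events.append(timestamp)
        return len(events) >= self.threshold
```

Keying the counts per client means one noisy caller can be escalated to the incident response process without raising alerts for everyone else.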

Collaboration and Information Sharing

Collaborating with industry peers, researchers, and security experts can provide valuable insights into emerging threats and best practices for mitigating vulnerabilities. Information-sharing initiatives can help organizations stay informed about the latest security developments and strengthen their defenses against zero-day attacks.

Conclusion

Zero-day vulnerabilities in AI code generation represent a significant security challenge, with the potential for severe consequences if exploited by malicious actors. By understanding the methods and techniques hackers use to exploit these vulnerabilities, organizations can take proactive measures to strengthen their security posture and protect their AI-driven systems. Regular security audits, robust input validation, model hardening, continuous monitoring, and collaboration are essential tactics for mitigating the risks associated with zero-day vulnerabilities and ensuring the secure and reliable operation of AI code generation technologies.

How Hackers Exploit Zero-Day Vulnerabilities in AI Code Generation: Strategies and Techniques

06 October